Context Engineering for Agents

By Lance Martin

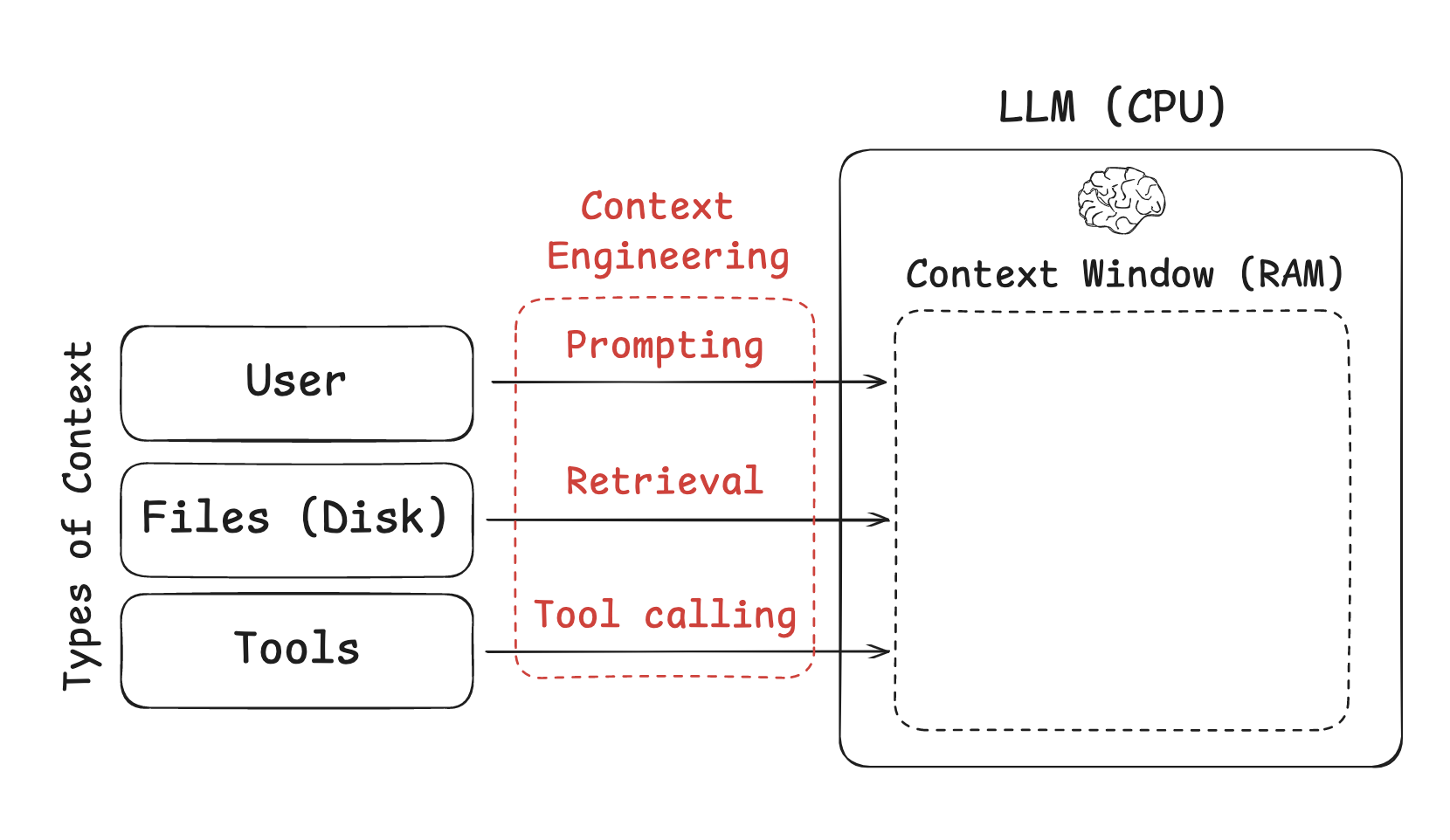

TL;DR: Agents need context to perform tasks. Context engineering is the art and science of filling the context window with just the right information at each step.

Context Engineering

LLMs are like a new kind of operating system. The LLM is the CPU, and its context window is the RAM.

Context engineering is the "...delicate art and science of filling the context window with just the right information for the next step." - Andrej Karpathy

Types of Context

Instructions – prompts, memories, few-shot examples, tool descriptions, etc.

Knowledge – facts, memories, etc.

Tools – feedback from tool calls.

Context Engineering for Agents

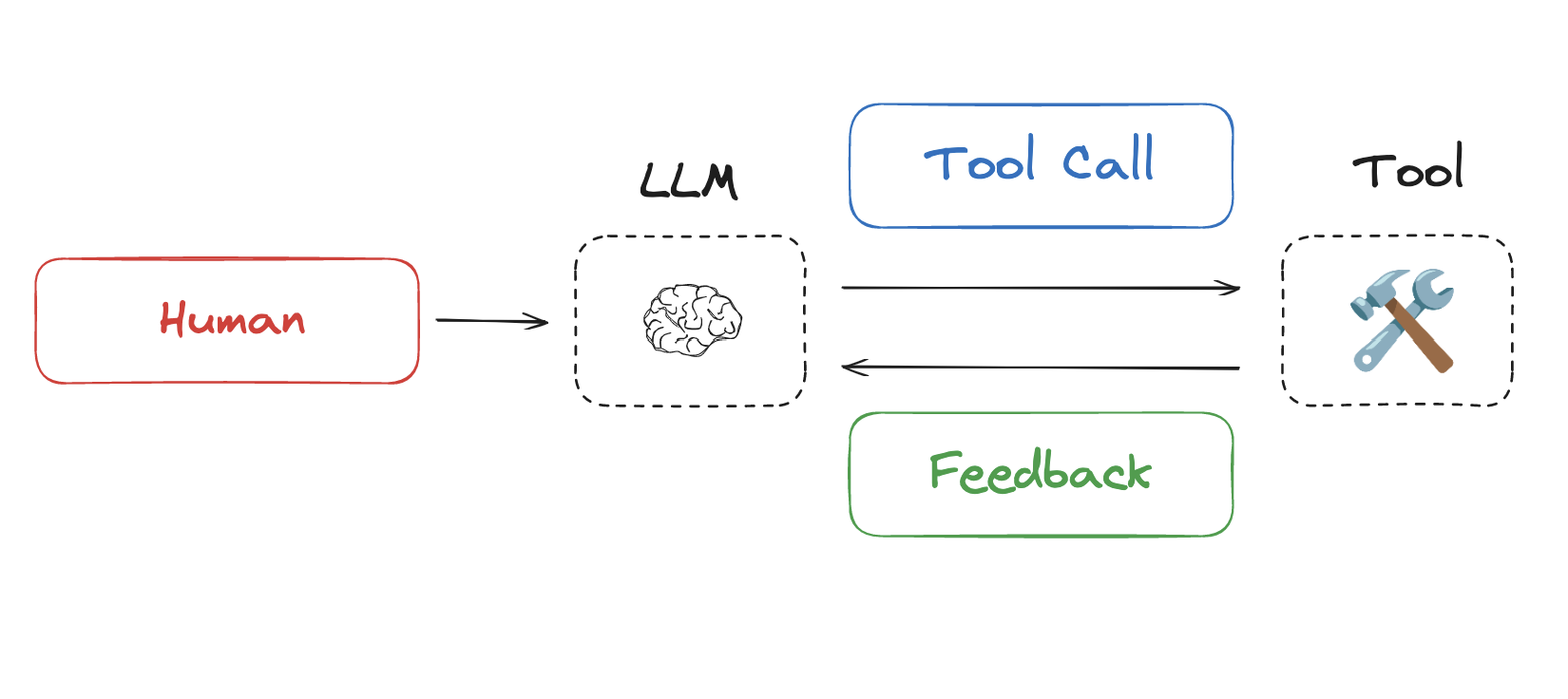

Agents interleave LLM invocations and tool calls for long-running tasks.

Problems with Long Context

Context Poisoning: A hallucination makes it into the context.

Context Distraction: The context grows so long that the model over-focuses on it and neglects what it learned in training.

Context Confusion: Superfluous context influences response.

Context Clash: Parts of context disagree.

"Context engineering is effectively the #1 job of engineers building AI agents." - Cognition

Four Strategies for Context Engineering

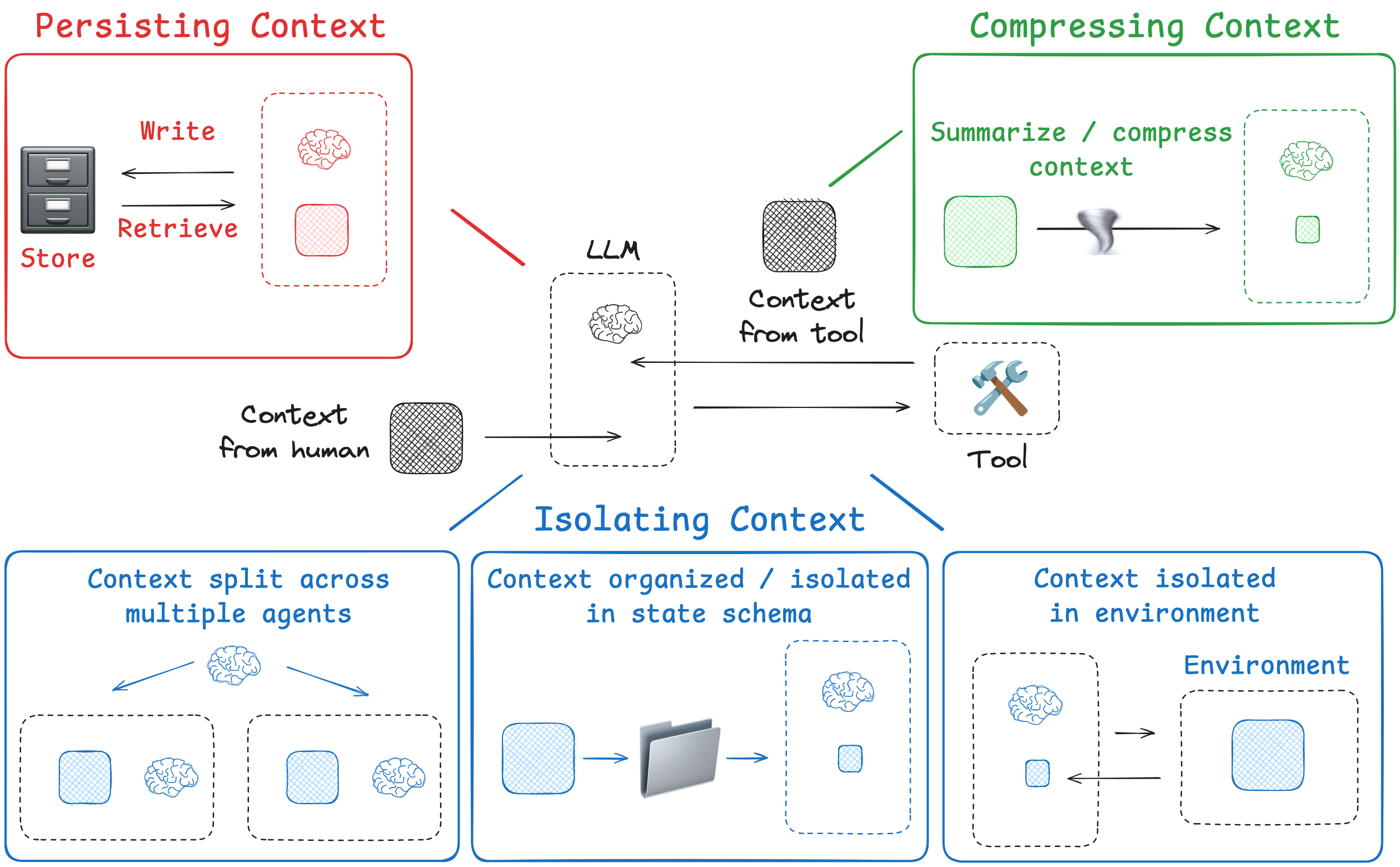

Write Context

Select Context

Compress Context

Isolate Context

Write Context

Saving context outside the context window to help an agent perform a task.

Scratchpads

Agents take notes and save information externally, similar to humans.

Example: Anthropic's multi-agent researcher saves plans to memory to avoid truncation.
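
A minimal sketch of a scratchpad, assuming a hypothetical `Scratchpad` class backed by a JSON file. The class name, file layout, and keys are illustrative and not taken from any agent framework; in practice `write` and `read` would be exposed to the agent as tools.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


class Scratchpad:
    """Hypothetical note store that lives outside the context window."""

    def __init__(self, path: Path):
        self.path = path

    def _load(self) -> dict:
        # Notes persist on disk, so they survive context truncation.
        if self.path.exists():
            return json.loads(self.path.read_text())
        return {}

    def write(self, key: str, note: str) -> None:
        notes = self._load()
        notes[key] = note
        self.path.write_text(json.dumps(notes))

    def read(self, key: str) -> Optional[str]:
        return self._load().get(key)


pad = Scratchpad(Path(tempfile.mkdtemp()) / "scratchpad.json")
pad.write("plan", "1. search sources 2. compare findings 3. draft report")
saved_plan = pad.read("plan")
```

The plan can be read back at any later step, even if the messages that produced it have been dropped from the window.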

Write Context (Cont.)

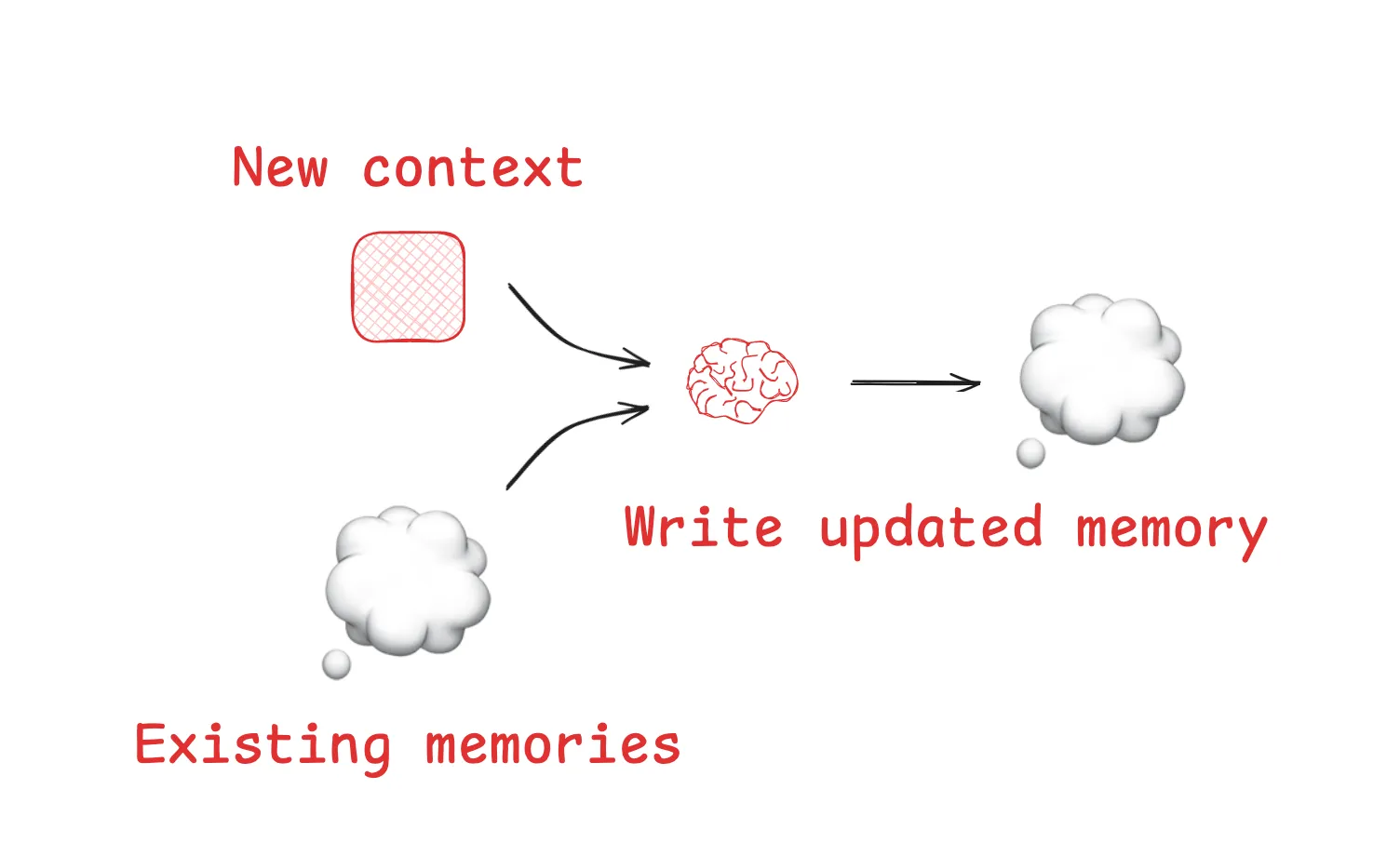

Memories

Agents remember things across many sessions.

Examples: Reflexion, Generative Agents, ChatGPT, Cursor, Windsurf.

Select Context

Pulling context into the context window to help an agent perform a task.

Scratchpads

Agents read from scratchpads via tool calls, or from fields of the runtime state that are exposed to the LLM.

Select Context (Cont.)

Memories

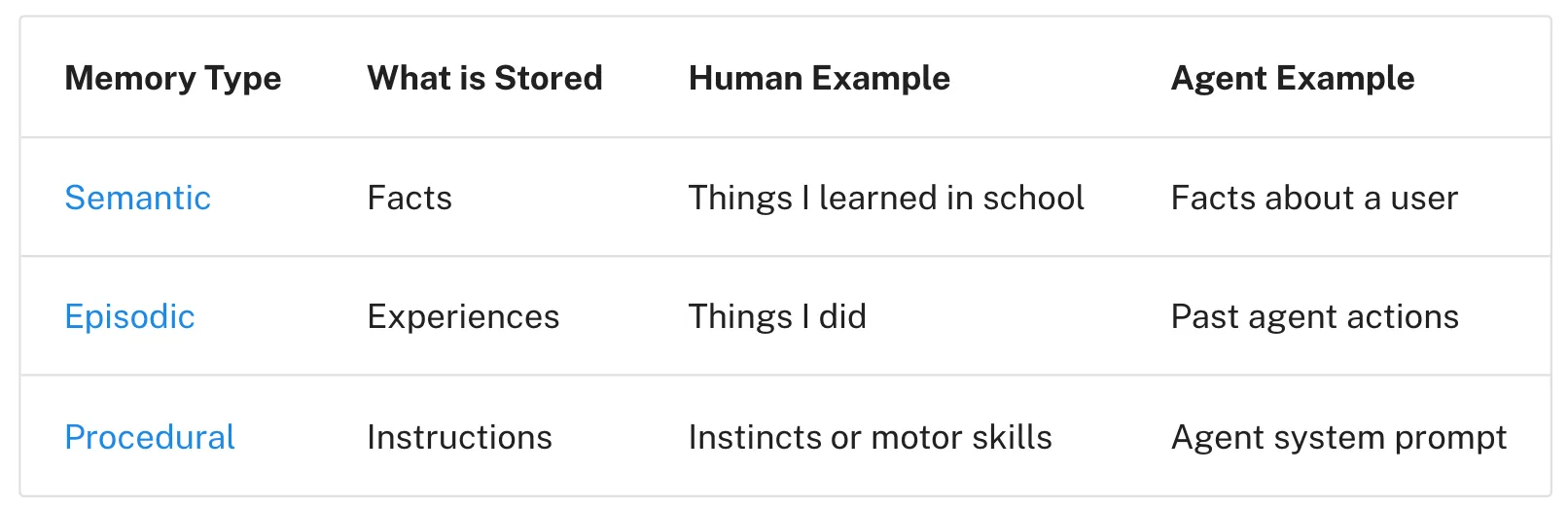

Agents select relevant memories: episodic, procedural, or semantic.

Challenges: Ensuring relevance and avoiding undesired retrieval.
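
A minimal sketch of memory selection, assuming a hypothetical `Memory` record and a word-overlap score. The overlap score is only a stand-in: real systems typically use embeddings or similar indexing. Returning nothing when no memory matches is one simple guard against undesired retrieval.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    kind: str  # "episodic", "procedural", or "semantic"
    text: str


def select_memories(query: str, memories: list[Memory], k: int = 2) -> list[Memory]:
    """Rank memories by word overlap with the query; drop those with no overlap."""
    query_words = set(query.lower().split())

    def score(m: Memory) -> int:
        return len(query_words & set(m.text.lower().split()))

    ranked = sorted(memories, key=score, reverse=True)
    return [m for m in ranked[:k] if score(m) > 0]


store = [
    Memory("procedural", "always run the tests before committing code"),
    Memory("semantic", "the user prefers python over javascript"),
    Memory("episodic", "last week the deploy failed because of a missing env var"),
]
selected = select_memories("which tests should run before committing", store, k=1)
```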

Select Context (Cont.)

Tools

RAG applied to tool descriptions improves tool selection accuracy.
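
A minimal sketch of retrieval over tool descriptions, assuming a hypothetical `TOOLS` registry and a bag-of-words cosine similarity in place of a real embedding model. Only the top-ranked tools would be placed in the prompt.

```python
import math
from collections import Counter

# Hypothetical tool registry: name -> description.
TOOLS = {
    "search_web": "search the web for pages matching a query",
    "read_file": "read the contents of a file from disk",
    "run_sql": "run a sql query against the analytics database",
}


def embed(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_tools(task: str, k: int = 1) -> list[str]:
    """Return the names of the k tools whose descriptions best match the task."""
    task_vec = embed(task)
    ranked = sorted(TOOLS, key=lambda name: cosine(task_vec, embed(TOOLS[name])), reverse=True)
    return ranked[:k]


chosen = select_tools("read the config file from disk")
```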

Knowledge

RAG is central to context engineering, especially in code agents.

Compress Context

Retaining only the tokens required to perform a task.

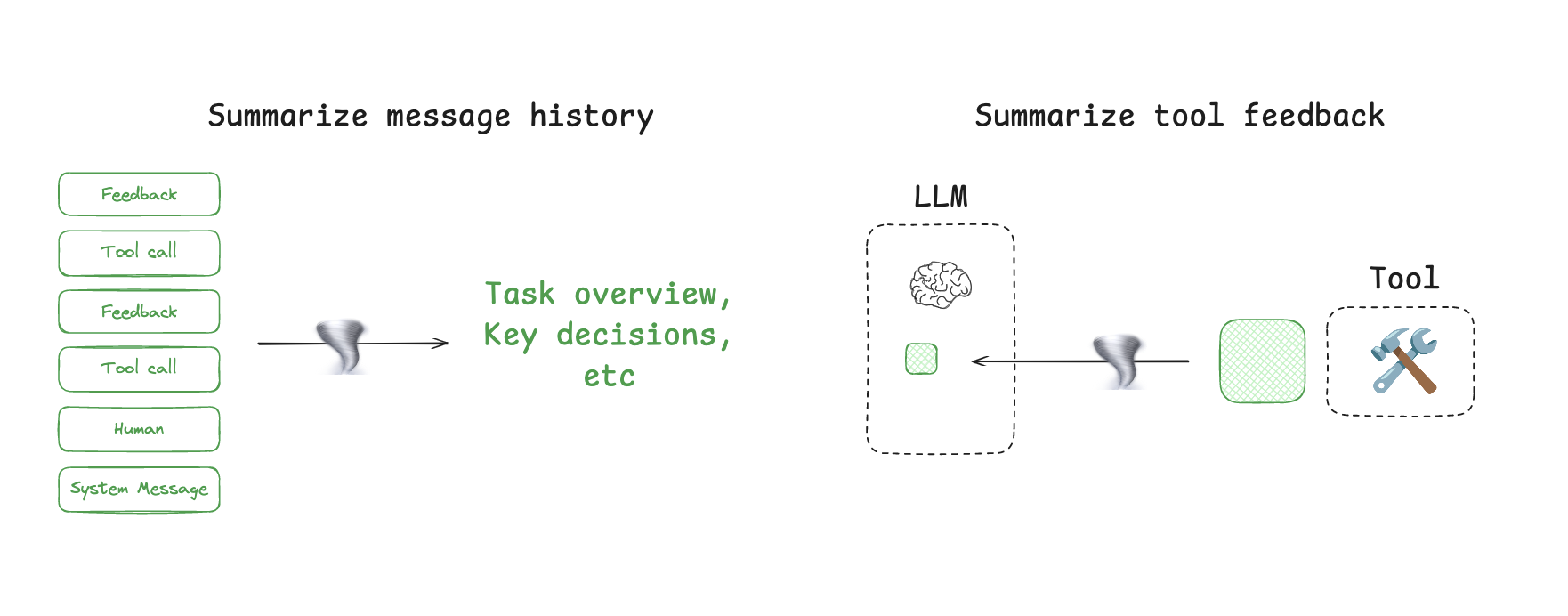

Context Summarization

Summarize agent interactions to manage token use. Example: Claude Code auto-compact.
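
A minimal sketch of summarization under a token budget. It assumes a crude word-count token estimate and a hypothetical `stub_summarize` function standing in for an LLM call; it is not a description of how Claude Code implements auto-compact.

```python
from typing import Callable

Message = dict[str, str]


def estimate_tokens(messages: list[Message]) -> int:
    """Crude estimate: one token per whitespace-separated word."""
    return sum(len(m["content"].split()) for m in messages)


def compact(
    messages: list[Message],
    summarize: Callable[[list[Message]], str],
    budget: int,
    keep_recent: int = 2,
) -> list[Message]:
    """Replace older messages with one summary once the budget is exceeded."""
    if estimate_tokens(messages) <= budget or len(messages) <= keep_recent:
        return messages
    split = len(messages) - keep_recent
    old, recent = messages[:split], messages[split:]
    summary = {"role": "system", "content": "Summary of earlier turns: " + summarize(old)}
    return [summary] + recent


def stub_summarize(messages: list[Message]) -> str:
    # Placeholder for an LLM summarization call.
    return f"{len(messages)} earlier messages about the task"


history = [
    {"role": "user", "content": "find the bug in the payment service please"},
    {"role": "assistant", "content": "looking at the logs for the payment service now"},
    {"role": "user", "content": "any progress"},
    {"role": "assistant", "content": "found a null check missing"},
]
compacted = compact(history, stub_summarize, budget=10)
```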

Compress Context (Cont.)

Context Trimming

Filter or prune context using heuristics or trained models.

Example: Removing older messages or using Provence for QA.
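
A minimal sketch of the simplest heuristic, removing older messages. It assumes a word-count token estimate and a hypothetical `trim_messages` helper that always keeps the system message; a trained pruner such as Provence would replace this rule with a learned one.

```python
def trim_messages(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep the system message plus the newest messages that fit in max_tokens."""

    def tokens(m: dict) -> int:
        return len(m["content"].split())

    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    remaining = max_tokens - sum(tokens(m) for m in system)

    kept = []
    for m in reversed(rest):  # walk from newest to oldest
        if tokens(m) > remaining:
            break
        kept.append(m)
        remaining -= tokens(m)
    return system + list(reversed(kept))


messages = [
    {"role": "system", "content": "you are a helpful agent"},
    {"role": "user", "content": "first question about setup"},
    {"role": "assistant", "content": "first answer with details"},
    {"role": "user", "content": "second question"},
    {"role": "assistant", "content": "second answer"},
]
trimmed = trim_messages(messages, max_tokens=10)
```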

Isolate Context

Splitting context up to help an agent perform a task.

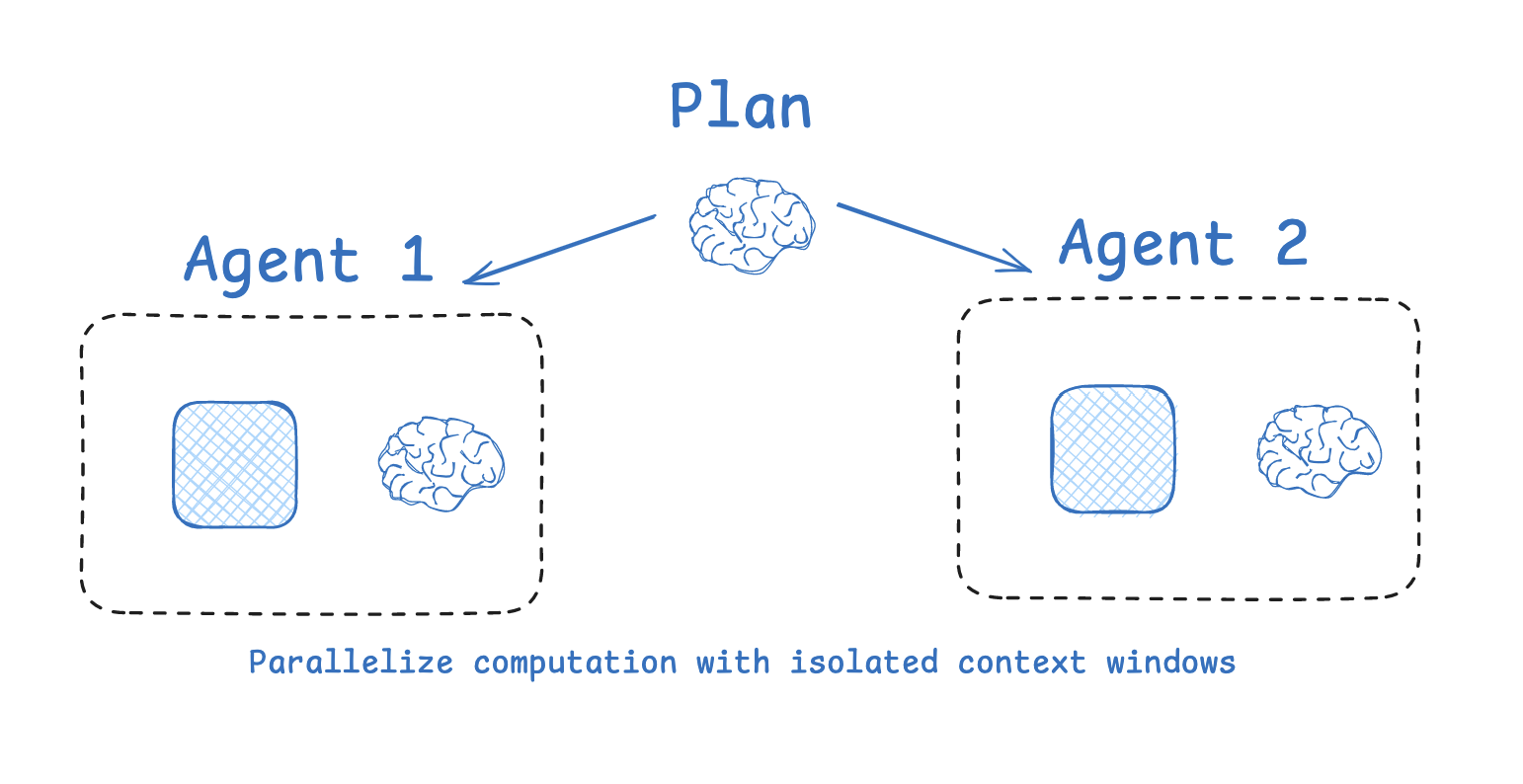

Multi-agent

Split context across sub-agents for separation of concerns.
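
A minimal sketch of context isolation across sub-agents, assuming a hypothetical `SubAgent` class and a `stub_llm` function in place of a real model call. Each sub-agent keeps its own message list, and the lead agent only ever sees the final results.

```python
from typing import Callable


class SubAgent:
    """Hypothetical worker with its own private context."""

    def __init__(self, name: str, llm: Callable[[str], str]):
        self.name = name
        self.llm = llm
        self.messages: list[str] = []  # never shared with other agents

    def run(self, task: str) -> str:
        self.messages.append(f"task: {task}")
        result = self.llm(task)
        self.messages.append(f"result: {result}")
        return result


def stub_llm(prompt: str) -> str:
    # Placeholder for a real LLM call.
    return f"findings for '{prompt}'"


def lead_agent(question: str, subtopics: list[str]) -> list[str]:
    """Delegate each subtopic and collect only the results."""
    lead_context = [f"question: {question}"]
    for topic in subtopics:
        worker = SubAgent(topic, stub_llm)
        lead_context.append(worker.run(topic))
    return lead_context


context = lead_agent("compare vector databases", ["pricing", "latency"])
```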

Isolate Context (Cont.)

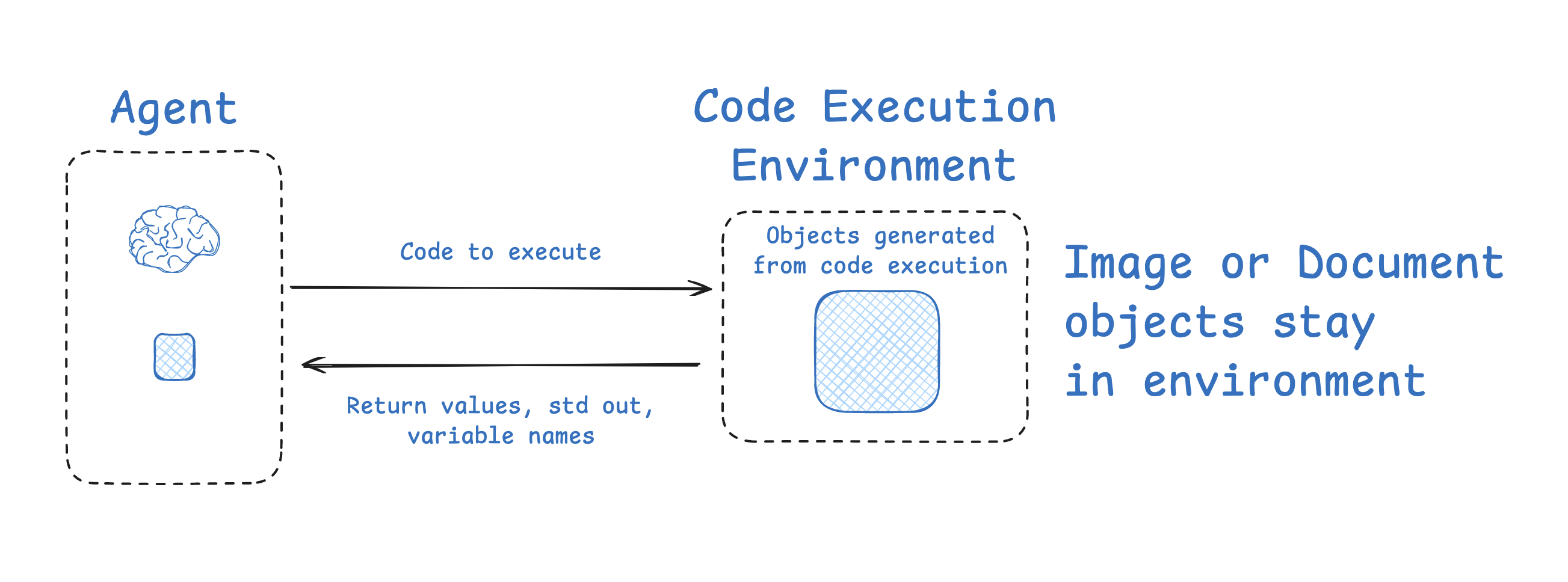

Context Isolation with Environments

Run tool calls in a sandbox (e.g., Hugging Face's CodeAgent executes its generated code in one) to keep token-heavy objects out of the context window.
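
A minimal sketch of the idea, assuming a hypothetical `Environment` class. It is not a real sandbox and provides no security isolation; it only shows a large object staying in the environment while the LLM receives a short observation.

```python
from typing import Any, Callable


class Environment:
    """Hypothetical stand-in for a sandbox: holds objects the LLM never sees directly."""

    def __init__(self) -> None:
        self._objects: dict[str, Any] = {}

    def store(self, name: str, obj: Any) -> str:
        # Only this short description is returned to the LLM.
        self._objects[name] = obj
        return f"stored '{name}' ({type(obj).__name__}, {len(obj)} items)"

    def run(self, name: str, fn: Callable[[Any], Any]) -> Any:
        # Computation happens next to the data; only the result leaves.
        return fn(self._objects[name])


env = Environment()
rows = [{"id": i, "value": i * i} for i in range(10_000)]
observation = env.store("rows", rows)
total = env.run("rows", lambda data: sum(r["value"] for r in data))
```

The 10,000 rows never enter the prompt; the agent works with the handle `rows` and the scalar `total`.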

Isolate Context (Cont.)

State

An agent's runtime state object can isolate context through the design of its schema.

Example: Expose only necessary fields to the LLM each turn.
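
A minimal sketch of a state schema, assuming a hypothetical `AgentState` dataclass and an `EXPOSED_FIELDS` allowlist. The field names are illustrative; the point is that the prompt is built from the allowlist rather than from the whole state.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    messages: list = field(default_factory=list)  # exposed to the LLM
    todo: str = ""                                # exposed to the LLM
    raw_tool_output: str = ""                     # kept in state, not shown


# Hypothetical allowlist of fields rendered into the prompt each turn.
EXPOSED_FIELDS = ("messages", "todo")


def build_prompt(state: AgentState) -> str:
    """Render only the allowlisted fields."""
    return "\n".join(f"{name}: {getattr(state, name)}" for name in EXPOSED_FIELDS)


state = AgentState(
    messages=["user: summarize the report"],
    todo="draft summary",
    raw_tool_output="x" * 50_000,
)
prompt = build_prompt(state)
```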

Conclusion

Context engineering strategies:

Write: Save context outside the window.

Select: Pull context into the window.

Compress: Retain only necessary tokens.

Isolate: Split context across agents or environments.

Understanding these patterns is key to building effective agents.
